
PUBLICATIONS

2025 | Dynamic Adaptation of the Event Transmission for Online Processing

Revista Iberoamericana de Automática e Informática Industrial

CITE

PDF

The advantages of event cameras over traditional cameras in terms of latency, dynamic range, and robustness to movement and vibrations have generated significant interest in their use for robotics applications. However, the event generation rates of cameras onboard mobile robots navigating in complex, unstructured, and dynamic environments can fluctuate strongly. These fluctuations can overflow the robots' onboard processing capacity and even cause a loss of responsiveness in the robot perception system. This article presents a method for handling the transmission of events from an event camera that adapts dynamically, ensuring the responsiveness of the perception system and preventing computational overloads. The proposed method consists of two mechanisms: the first prevents processing overflows by discarding information, and the second dynamically adjusts the size of event packets to reduce the delay between generation and processing. The proposed solution has been validated in experiments performed on testbenches and onboard an aerial robot.
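As a rough illustration of the second mechanism, the sketch below picks a packet size from the measured event rate and per-event processing time. The latency model (accumulation time N/r plus processing time N·τ), the target delay, the bounds, and the function name `adapt_packet_size` are assumptions made for illustration, not the adaptation rule used in the article.

```python
import math


def adapt_packet_size(event_rate, per_event_time, target_delay,
                      n_min=1, n_max=100_000):
    """Hypothetical packet-size rule (not the article's method).

    Assumes the delay of the oldest event in a packet of N events is roughly
    the time to accumulate the packet (N / event_rate) plus the time to
    process it (N * per_event_time), and returns the largest N whose delay
    stays within target_delay, clamped to [n_min, n_max].
    """
    if event_rate <= 0:
        return n_max  # no incoming events: packet size is irrelevant
    cost_per_event = 1.0 / event_rate + per_event_time  # seconds per event
    n = math.floor(target_delay / cost_per_event)
    return max(n_min, min(n_max, n))  # clamp to the allowed range


# Hypothetical numbers: 1 Mev/s, 1 us per event, 10 ms target delay
print(adapt_packet_size(1e6, 1e-6, 0.01))  # about 5000 events per packet
```

Solving for N in closed form keeps the rule cheap; a real system would also need to measure `event_rate` and `per_event_time` online, which this sketch leaves out.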

@article{tapia2025dynamic,

author={Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

journal={Revista Iberoamericana de Automática e Informática Industrial},

title={Dynamic Adaptation of the Event Transmission for Online Processing},

year={2025},

month={June},

volume={22},

number={4},

issn={1697-7912},

publisher={Universitat Politècnica de València},

pages={265--275},

doi={10.4995/riai.2025.23152}

}

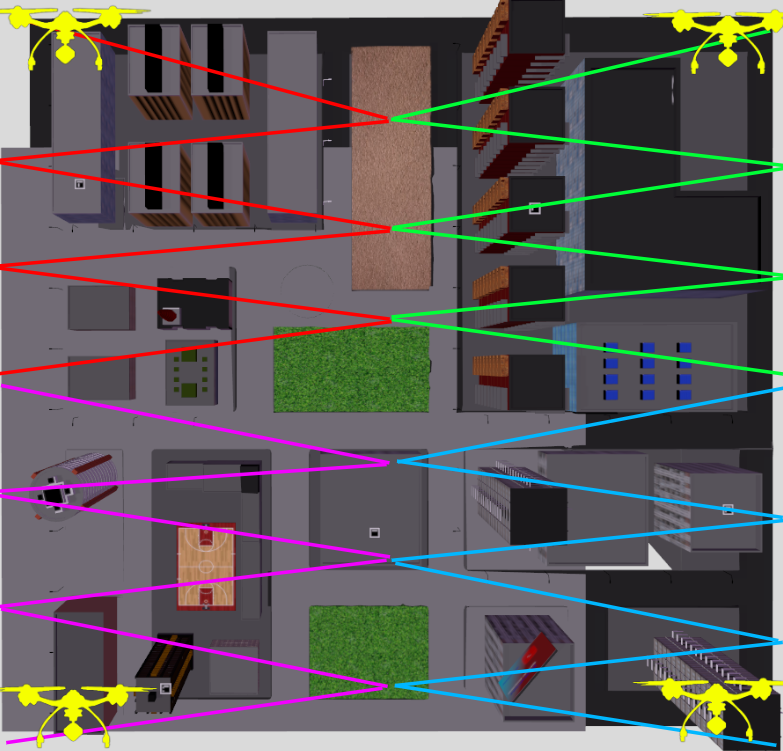

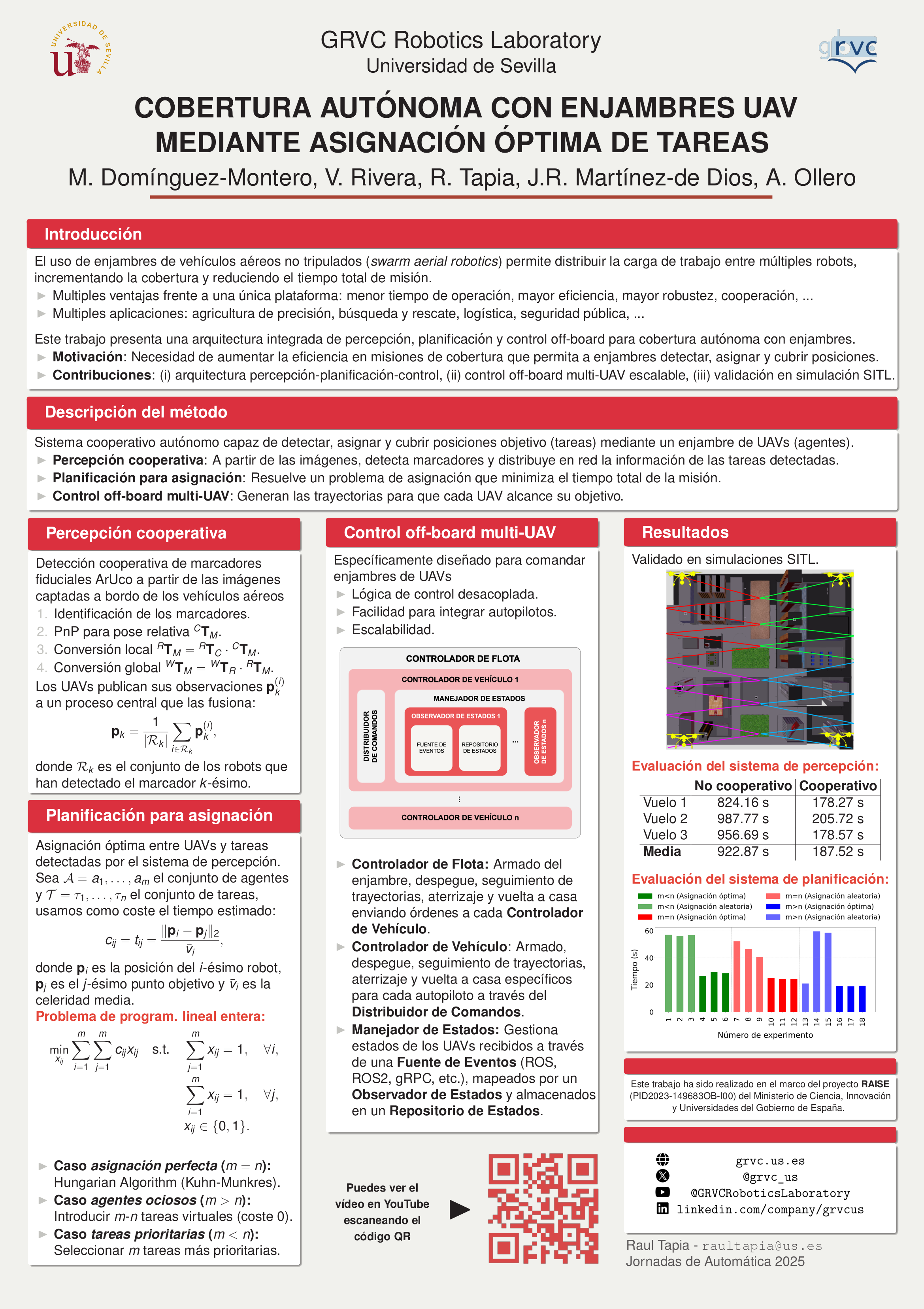

2025 | Autonomous Coverage with UAV Swarms using Optimal Task Assignment

Jornadas de Automática

CITE

PDF

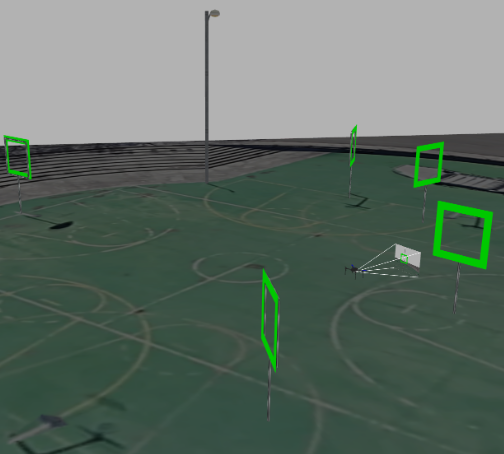

POSTER

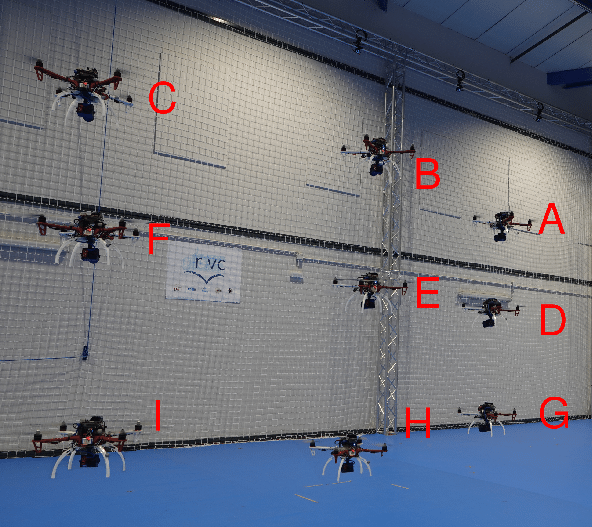

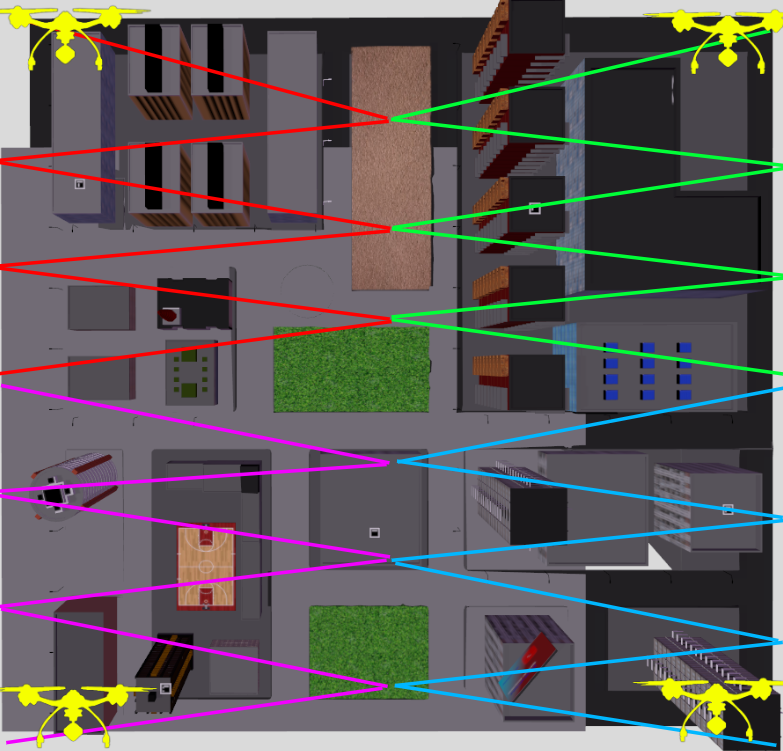

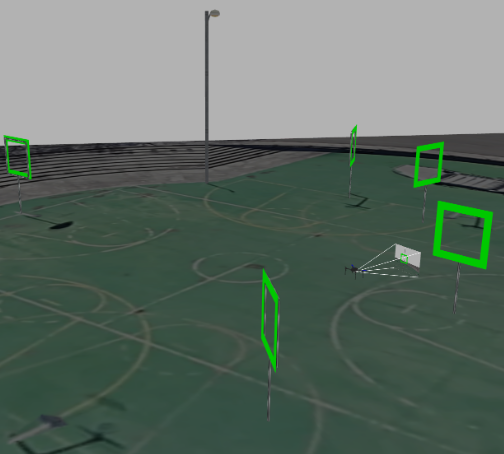

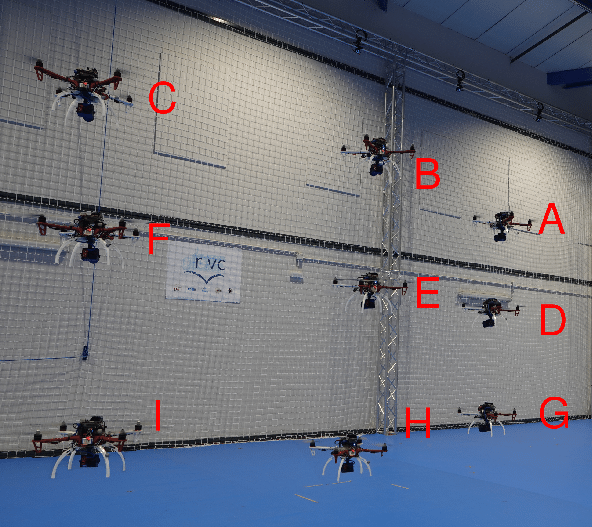

This work presents a cooperative perception-planning-navigation system that enables swarms of unmanned aerial vehicles (UAVs) to autonomously cover a set of target positions represented by visual markers. The perception system fuses distributed observations to more accurately estimate the location of each task, while the planner solves a linear programming problem to obtain the optimal UAV-task assignment. Finally, a multi-vehicle off-board control module generates the trajectories and executes the arming, takeoff, tracking, and landing operations. Experimental validation was carried out in a Software-In-The-Loop (SITL) simulation using PX4 and Gazebo. The results confirm that UAV cooperation significantly improves the efficiency and robustness of aerial coverage missions.
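The optimal UAV-task assignment can be illustrated on a tiny instance. The paper solves it as a linear program; this sketch swaps in an exhaustive search over permutations, which returns the same optimum for an assignment problem but only scales to a handful of UAVs. The Euclidean-distance cost, the positions, and the function name are hypothetical.

```python
import itertools
import math


def optimal_assignment(uav_positions, task_positions):
    """Exhaustive-search assignment minimizing total UAV-to-task distance.

    Stand-in for the paper's linear program (hypothetical sketch). Assumes one
    task per UAV. Returns (assignment, cost), where assignment[i] is the index
    of the task given to UAV i.
    """
    n = len(uav_positions)
    assert n == len(task_positions), "sketch assumes as many UAVs as tasks"
    best_perm, best_cost = None, math.inf
    for perm in itertools.permutations(range(n)):
        cost = sum(math.dist(uav_positions[i], task_positions[perm[i]])
                   for i in range(n))
        if cost < best_cost:
            best_perm, best_cost = perm, cost
    return list(best_perm), best_cost


uavs = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
tasks = [(0.0, 9.0), (1.0, 1.0), (9.0, 1.0)]
assignment, cost = optimal_assignment(uavs, tasks)
print(assignment, round(cost, 2))  # [1, 2, 0] 3.83
```

The search is factorial in the number of UAVs, which is why a linear-programming formulation is the practical choice for real swarms.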

@inproceedings{dominguez2025autonomous,

author={Domínguez-Montero, Manuel and Rivera, Víctor and Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={Jornadas de Automática},

title={Autonomous Coverage with UAV Swarms using Optimal Task Assignment},

year={2025},

month={September},

pages={1--5},

doi={10.17979/ja-cea.2025.46.12090}

}

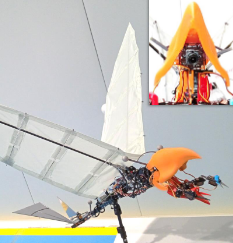

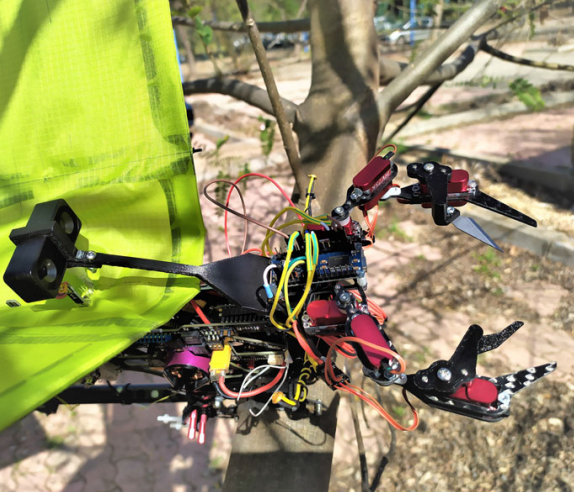

2025 | Flapping-Wing Flying Robot with Integrated Dual-Arm Scissors-Type Flora Sampling System

IEEE International Conference on Robotics and Automation

CITE

PDF

VIDEO

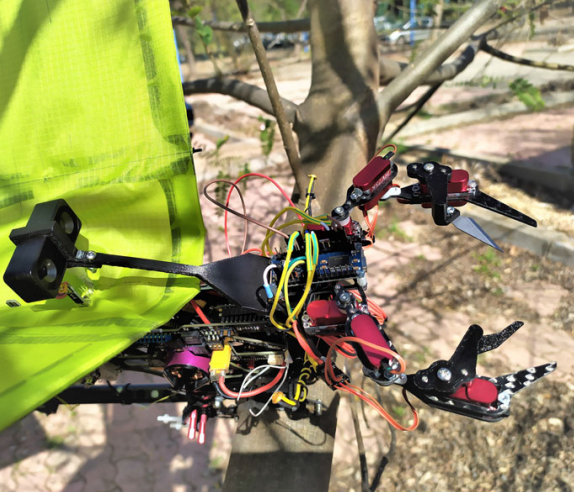

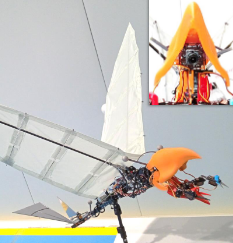

Flapping-wing robotic birds are inspired by nature and offer an alternative to the conventional high-speed rotary propellers of unmanned aerial platforms for generating thrust and lift. Recent advances in flapping technology have led to prototypes of leg-claw mechanisms for perching and, occasionally, very lightweight arms for sampling or aerial manipulation of tiny objects. A dual-arm manipulator mounted on top of a robotic bird, however, might be neither bio-inspired nor safe in the event of a collision with the environment or during human-robot interaction. In this work, the previously designed dual-arm scissors-type manipulator has been improved in terms of workspace, mechanism, vision system, and blade placement to provide a more natural way of sampling. The new dual arm, weighing 100.2 g, has been redesigned to fit inside a beak, which protects it against possible collisions and keeps the cutting blades within a protective shield. During flight, the dual-arm system stays hidden inside the cover; before manipulation, the lower beak opens and deploys the arm into a suitable position for sampling. The new safety cover (beak), together with the new blade mechanism, enhances both the cutting power and the safety of the operation. The experimental results show the successful cutting of a series of plant samples.

@inproceedings{gordillo2025flapping,

author={Gordillo Durán, Rodrigo and Tapia, Raul and Nekoo, Saeed Rafee and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE International Conference on Robotics and Automation},

title={Flapping-Wing Flying Robot with Integrated Dual-Arm Scissors-Type Flora Sampling System},

year={2025},

month={September},

pages={7512--7518},

doi={10.1109/ICRA55743.2025.11128106}

}

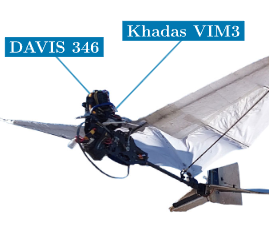

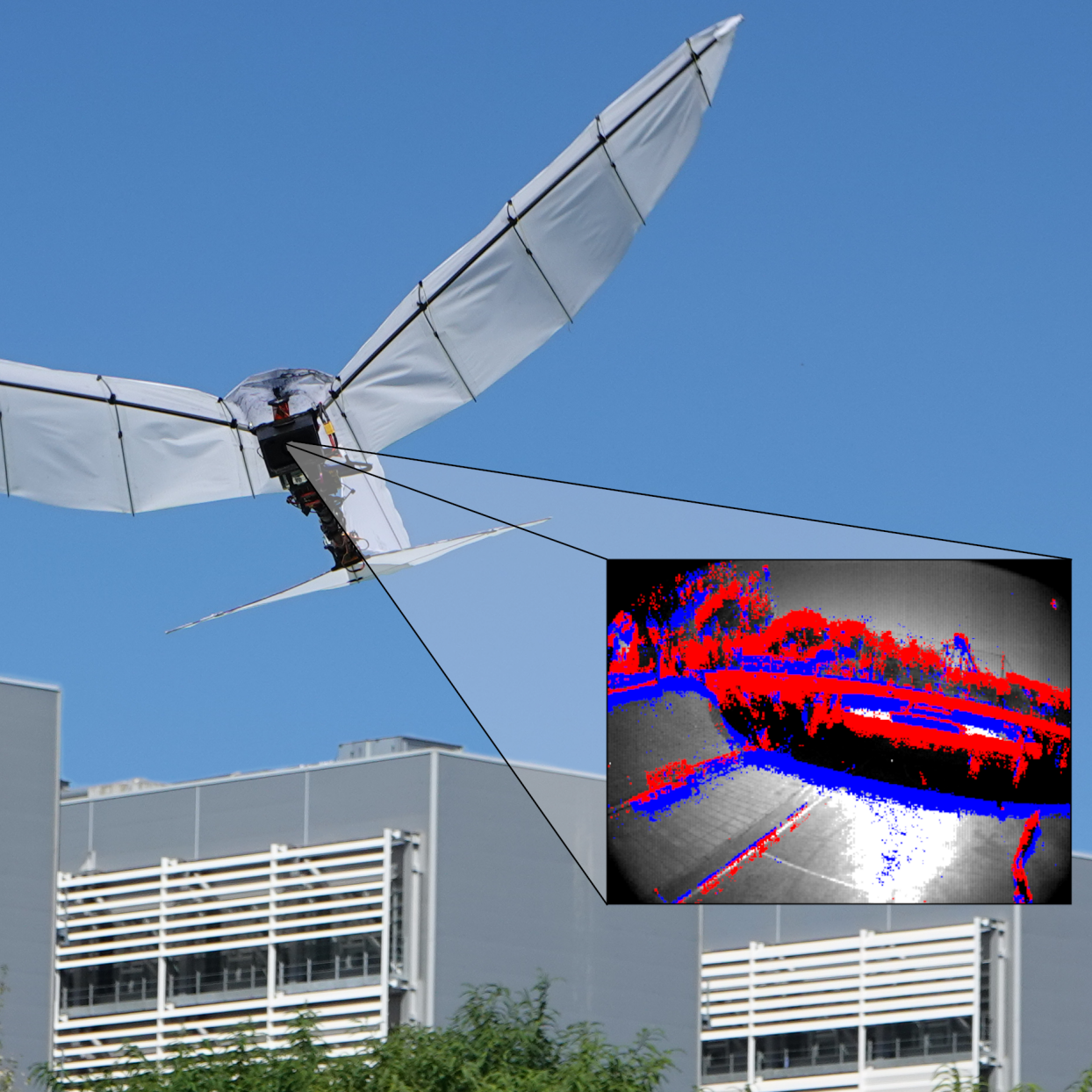

2025 | Flight of the Future: An Experimental Analysis of Event‐Based Vision for Online Perception Onboard Flapping‐Wing Robots

Advanced Intelligent Systems

CITE

PDF

DATASET

PRESS

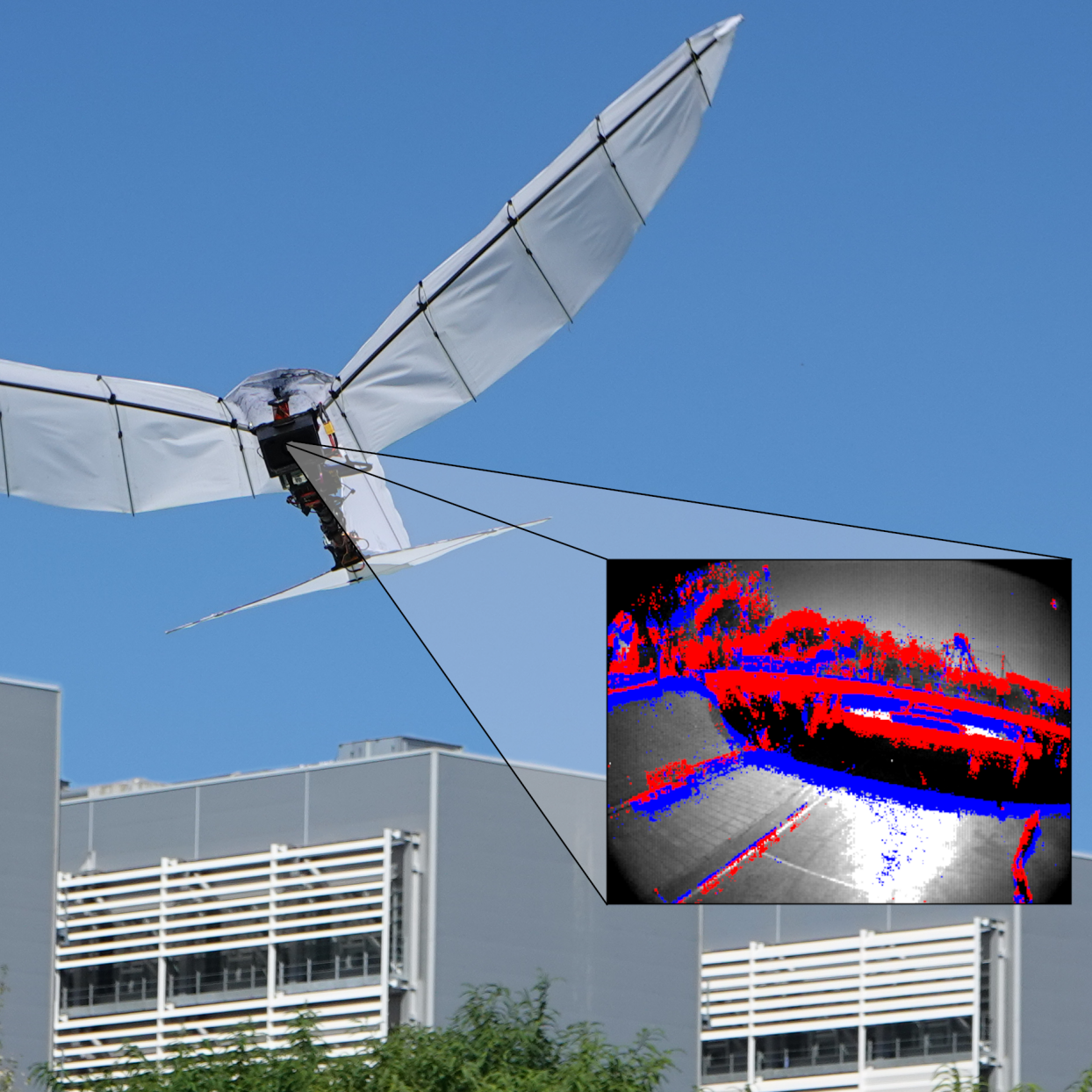

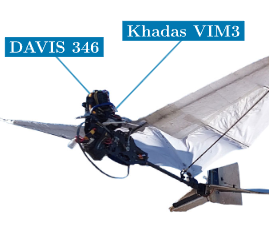

Inspired by bird flight, flapping-wing robots have gained significant attention due to their high maneuverability and energy efficiency. However, the development of their perception systems faces several challenges, mainly related to payload restrictions and the effects of flapping strokes on sensor data. The limited resources of lightweight onboard processors further constrain the online processing required for autonomous flight. Event cameras exhibit several properties suitable for ornithopter perception, such as low latency, robustness to motion blur, high dynamic range, and low power consumption. This article explores the use of event-based vision for online processing onboard flapping-wing robots. First, the suitability of event cameras under flight conditions is assessed through experimental tests. Second, the integration of event-based vision systems onboard flapping-wing robots is analyzed. Finally, the performance, accuracy, and computational cost of some widely used event-based vision algorithms are experimentally evaluated when integrated into flapping-wing robots flying in indoor and outdoor scenarios under different conditions. The results confirm the benefits and suitability of event-based vision for online perception onboard ornithopters, paving the way for enhanced autonomy and safety in real-world flight operations.

@article{tapia2025flight,

author={Tapia, Raul and Luna-Santamaria, Javier and Gutierrez Rodriguez, Ivan and Rodríguez-Gómez, Juan Pablo and Martínez-de Dios, José Ramiro and Ollero, Anibal},

journal={Advanced Intelligent Systems},

title={Flight of the Future: An Experimental Analysis of Event‐Based Vision for Online Perception Onboard Flapping‐Wing Robots},

year={2025},

month={March},

volume={0},

number={0},

issn={2640-4567},

publisher={Wiley},

pages={0--0},

doi={10.1002/aisy.202401065}

}

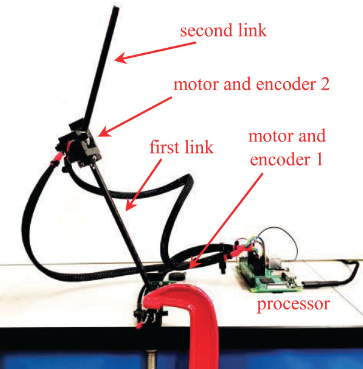

2025 | Practical Fractional-Order Terminal Sliding-Mode Control for a Class of Continuous-Time Nonlinear Systems

Transactions of the Institute of Measurement and Control

CITE

PDF

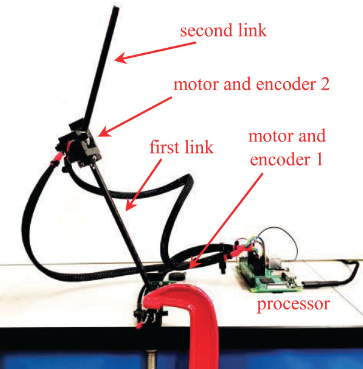

Terminal sliding-mode control (TSMC) has been studied and applied extensively in recent years. This technique yields a robust controller with tunable finite-time convergence, providing a fast and accurate response in the presence of parameter uncertainties. Accordingly, we present a closed-loop robust controller for a class of nonlinear systems based on a terminal sliding-mode controller with a novel fractional-order sliding surface. The motivation of this work is to use a time-varying gain in the fractional-order sliding surface: the gain is relatively small at the beginning of the regulation task, which reduces the amplitude of the control input, and increases near the final time, which reduces the steady-state error. Unlike the constant gain of the conventional terminal sliding surface, the time-varying gain keeps the amplitude of the control signal low while simultaneously enhancing precision. An extensive simulation study shows the performance of the proposed design. Finally, the experimental implementation of the fractional-order TSMC on a lightweight (90 g) two-degree-of-freedom manipulator is presented. The controller was implemented in C++ on a Raspberry Pi board, and a chattering-free modification was introduced to address the practical limitations of the actuators.
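A fractional-order sliding surface needs a fractional derivative of the error. One common discrete approximation is the Grünwald-Letnikov scheme, sketched below together with a hypothetical time-varying gain that starts small and grows toward the final time. The quadratic gain profile, its parameters (`lam_min`, `lam_max`), and how these pieces would enter the sliding surface are assumptions for illustration; the article's exact surface and gain are not reproduced here.

```python
import math


def gl_weights(alpha, n):
    """Grünwald-Letnikov weights w_j = (-1)^j * binom(alpha, j), j = 0..n."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / j))
    return w


def gl_derivative(samples, alpha, h):
    """Approximate D^alpha f at the last sample from uniformly spaced samples.

    samples[k] = f(k * h); uses the full history (no short-memory truncation).
    """
    n = len(samples) - 1
    w = gl_weights(alpha, n)
    acc = sum(w[j] * samples[n - j] for j in range(n + 1))
    return acc / h ** alpha


def time_varying_gain(t, t_final, lam_min=0.5, lam_max=5.0):
    """Hypothetical gain: lam_min at t = 0, rising quadratically to lam_max at t_final."""
    ratio = min(max(t / t_final, 0.0), 1.0)
    return lam_min + (lam_max - lam_min) * ratio ** 2


# Half-derivative of f(t) = t at t = 1, compared with the exact 2 / sqrt(pi)
h = 1e-3
samples = [k * h for k in range(1001)]
print(gl_derivative(samples, 0.5, h), 2 / math.sqrt(math.pi))
```

With `alpha = 1` the weights reduce to `[1, -1, 0, ...]`, so the scheme falls back to an ordinary backward difference, which is a quick sanity check on the recurrence.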

@article{feliu2025practical,

author={Feliu-Talegón, Daniel and Nekoo, Saeed Rafee and Tapia, Raul and Acosta, José Ángel and Ollero, Anibal},

journal={Transactions of the Institute of Measurement and Control},

title={Practical Fractional-Order Terminal Sliding-Mode Control for a Class of Continuous-Time Nonlinear Systems},

year={2025},

month={January},

volume={0},

number={0},

issn={1477-0369},

publisher={SAGE},

pages={0--0},

doi={10.1177/01423312241286352}

}

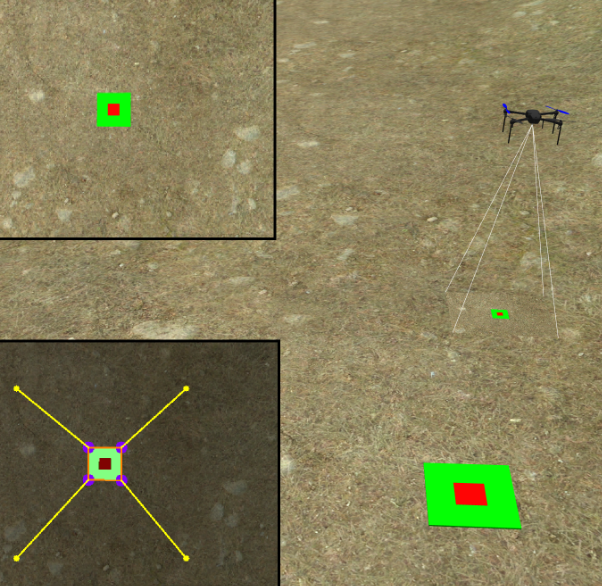

2024 | Autonomous Guidance of an Aerial Robot using 6-DoF Visual Control and Behavior Trees

ROBOT 2024: Iberian Robotics Conference

CITE

PDF

The use of unmanned aerial vehicles in inspection and maintenance tasks reduces risks to human operators, increases the efficiency and quality of operations, and reduces the associated economic costs. Accurate navigation is crucial for automating these missions. This paper introduces an autonomous guidance scheme for aerial robots based on behavior trees that combine Position-Based Visual Servoing (PBVS) and waypoint following. The behavior tree dynamically adapts the robot navigation strategy to the required level of accuracy, accommodating different types of missions and the presence of obstacles. As a result, the proposed navigation scheme enables complex missions to be performed in tight environments with high levels of safety and robustness. All functional modules have been integrated into a behavior tree architecture, which provides a robust, reactive, modular, and flexible framework for handling high-level tasks in complex missions. Validation through extensive software-in-the-loop simulations has demonstrated the robustness and accuracy of the method, confirming its potential for real-world applications. An open-source implementation of our method is released under the GNU GPLv3 license.
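A behavior tree that switches between visual servoing and waypoint following can be sketched with two composite nodes: a Fallback, which ticks children until one does not fail, and a Sequence, which ticks children until one does not succeed. The node classes, the conditions (`needs_high_accuracy`, `target_visible`), and the blackboard dictionary below are hypothetical; this is not the released open-source implementation.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Sequence:
    """Ticks children in order; stops at the first child that is not SUCCESS."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; stops at the first child that is not FAILURE."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


class Condition:
    """Leaf that succeeds when its predicate on the blackboard holds."""
    def __init__(self, name, predicate):
        self.name, self.predicate = name, predicate

    def tick(self, bb):
        return Status.SUCCESS if self.predicate(bb) else Status.FAILURE


class Action:
    """Hypothetical action leaf: records itself as the active controller."""
    def __init__(self, name):
        self.name = name

    def tick(self, bb):
        bb["active"] = self.name  # which controller is commanding the robot
        return Status.RUNNING     # assume the action is still in progress


# Hypothetical tree: use PBVS when high accuracy is required and the target
# is visible; otherwise fall back to waypoint following.
tree = Fallback(
    Sequence(
        Condition("needs_high_accuracy", lambda bb: bb["high_accuracy"]),
        Condition("target_visible", lambda bb: bb["target_visible"]),
        Action("pbvs"),
    ),
    Action("waypoint_following"),
)

bb = {"high_accuracy": True, "target_visible": True}
tree.tick(bb)
print(bb["active"])  # pbvs
bb["target_visible"] = False
tree.tick(bb)
print(bb["active"])  # waypoint_following
```

Because the tree is re-ticked continuously, losing sight of the target switches the robot to waypoint following on the next tick without any explicit state-machine transition.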

@inproceedings{tapia2024autonomous,

author={Tapia, Raul and Gil-Castilla, Miguel and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={ROBOT 2024: Iberian Robotics Conference},

title={Autonomous Guidance of an Aerial Robot using 6-DoF Visual Control and Behavior Trees},

year={2024},

month={November},

pages={1--6},

doi={10.1109/ROBOT61475.2024.10797354}

}

2024 | Combining Route Planning and Visual Servoing for Offshore Wind-Turbine Inspection Using UAVs

ROBOT 2024: Iberian Robotics Conference

CITE

PDF

Offshore wind turbines are crucial assets in the generation of sustainable energy, but their location makes inspection complex. In this context, UAVs (Unmanned Aerial Vehicles) can help automate the inspection process, reducing costs, risks, and operation time. This paper combines route planning and visual servoing for the fast and efficient inspection of offshore wind turbines using UAVs. The route planner encodes the inspection problem as a graph, over which integer linear programming is used to obtain the optimal sequence of inspection points. However, the actual positions of the wind turbines may differ from the expected ones, in which case the information collected by the UAV by simply executing the inspection plan might be useless. To cope with this, a visual control method allows the UAV to track the wind turbine. The method relies on a partitioned image-based visual servoing approach to keep the wind turbine in the camera's field of view as the UAV moves between inspection points. Simulation results under realistic conditions demonstrate the benefits of the proposed approach.
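The route-planning step orders the inspection points so that the total flight distance is minimal. The paper formulates this as an integer linear program over a graph; the sketch below swaps in a brute-force search over visiting orders from a fixed start point, which gives the same optimum on small instances. The points, the Euclidean cost, and the function name are hypothetical.

```python
import itertools
import math


def best_inspection_order(start, points):
    """Brute-force shortest open path from `start` through every point once.

    Stand-in for the paper's ILP formulation (hypothetical sketch).
    """
    best_order, best_len = None, math.inf
    for order in itertools.permutations(range(len(points))):
        length, current = 0.0, start
        for idx in order:
            length += math.dist(current, points[idx])
            current = points[idx]
        if length < best_len:
            best_order, best_len = list(order), length
    return best_order, best_len


start = (0.0, 0.0, 0.0)
points = [(30.0, 0.0, 20.0), (10.0, 0.0, 20.0), (20.0, 0.0, 20.0)]
order, length = best_inspection_order(start, points)
print(order, round(length, 2))  # [1, 2, 0] 42.36
```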

@inproceedings{caballero2024combining,

author={Caballero, Alvaro and Tapia, Raul and Ollero, Anibal},

booktitle={ROBOT 2024: Iberian Robotics Conference},

title={Combining Route Planning and Visual Servoing for Offshore Wind-Turbine Inspection Using UAVs},

year={2024},

month={November},

pages={1--6},

doi={10.1109/ROBOT61475.2024.10796917}

}

2024 | Modular Framework for Autonomous Waypoint Following and Landing based on Behavior Trees

ROBOT 2024: Iberian Robotics Conference

CITE

PDF

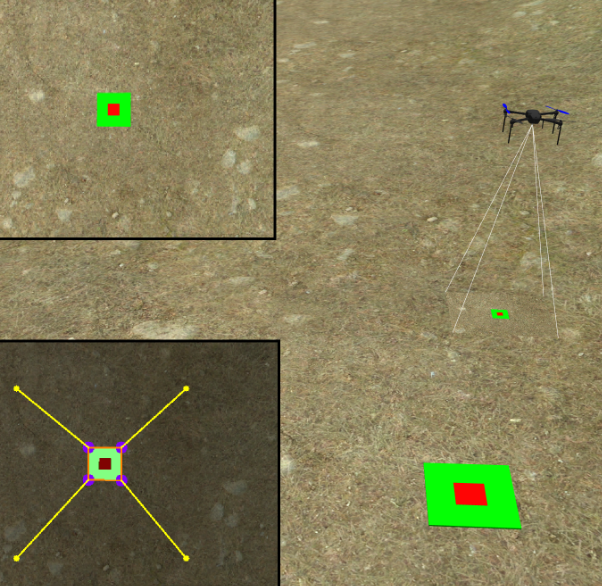

Autonomous navigation and landing are critical capabilities for modern unmanned aerial vehicles (UAVs), particularly in complex and dynamic environments. In this paper, we propose a modular framework that employs behavior trees to achieve reliable waypoint following and landing. Behavior trees offer a flexible and easily extensible method of managing autonomous behaviors, enabling the integration of various algorithms and sensors. Our framework is designed to enhance the robustness and flexibility of UAV navigation and landing procedures. Experimental results from Software In The Loop (SITL) simulation tests demonstrate the system's robustness and adaptability, showcasing its potential for a wide range of UAV applications. This work contributes to the advancement of autonomous UAV technology by providing a scalable and efficient solution for mission-critical operations.

@inproceedings{gil2024modular,

author={Gil-Castilla, Miguel and Tapia, Raul and Maza, Ivan and Ollero, Anibal},

booktitle={ROBOT 2024: Iberian Robotics Conference},

title={Modular Framework for Autonomous Waypoint Following and Landing based on Behavior Trees},

year={2024},

month={November},

pages={1--6},

doi={10.1109/ROBOT61475.2024.10797361}

}

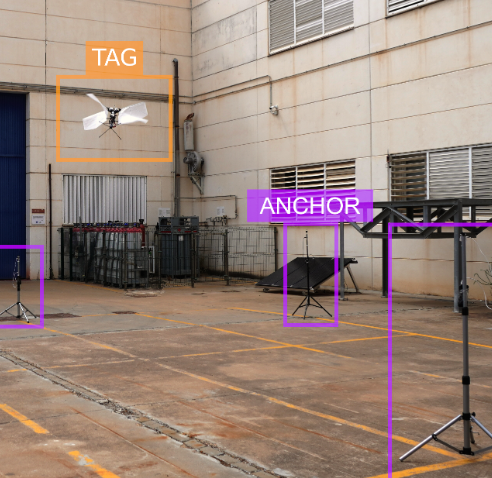

2024 | Range-Only Localization System for Small-Scale Flapping-Wing Robots

40th Anniversary of the IEEE Conference on Robotics and Automation

CITE

PDF

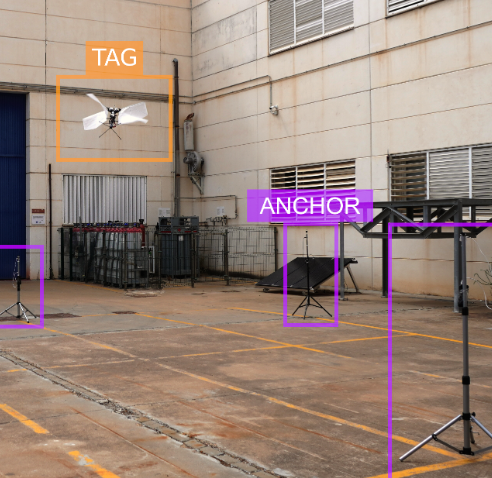

The design of localization systems for small-scale flapping-wing aerial robots faces significant challenges caused by the limited payload and onboard computational resources. This paper presents an ultra-wideband localization system specifically designed for small-scale flapping-wing robots. The solution relies on custom 5 g ultra-wideband sensors and provides robust, very efficient (in terms of both computation and energy consumption), and accurate (mean error of 0.28 m) 3D position estimation. We validate our system using a Flapper Nimble+ flapping-wing robot.
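Range-only localization estimates a 3D position from distances to fixed anchors. A standard way to do this, shown here as an assumption rather than the estimator used in the paper, linearizes the range equations by subtracting the first one, which gives 2(a_i − a_0)·p = ‖a_i‖² − ‖a_0‖² − r_i² + r_0², and solves the result in the least-squares sense. The anchor layout, the ranges, and the helper names are hypothetical.

```python
import math


def solve3(m, v):
    """Solve a 3x3 linear system m x = v by Gaussian elimination with pivoting."""
    a = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def multilaterate(anchors, ranges):
    """Linear least-squares 3D position from >= 4 non-coplanar anchors."""
    a0, r0 = anchors[0], ranges[0]
    rows, rhs = [], []
    for ai, ri in zip(anchors[1:], ranges[1:]):
        rows.append([2.0 * (ai[k] - a0[k]) for k in range(3)])
        rhs.append(sum(c * c for c in ai) - sum(c * c for c in a0) - ri ** 2 + r0 ** 2)
    # Normal equations: (A^T A) p = A^T b
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    return solve3(ata, atb)


# Hypothetical anchor layout (meters) and noise-free ranges
anchors = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0),
           (0.0, 0.0, 3.0), (4.0, 4.0, 3.0)]
true_pos = (1.0, 2.0, 1.5)
ranges = [math.dist(a, true_pos) for a in anchors]
print(multilaterate(anchors, ranges))  # close to [1.0, 2.0, 1.5]
```

With noisy ultra-wideband ranges, this linear estimate is often used only as an initial guess for a nonlinear refinement or a filter.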

@inproceedings{tapia2024range,

author={Tapia, Raul and Rodríguez, Iván G. and Luna-Santamaría, Javier and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={40th Anniversary of the IEEE Conference on Robotics and Automation},

title={Range-Only Localization System for Small-Scale Flapping-Wing Robots},

year={2024},

month={September},

pages={1--2},

doi={10.48550/arXiv.2501.01213}

}

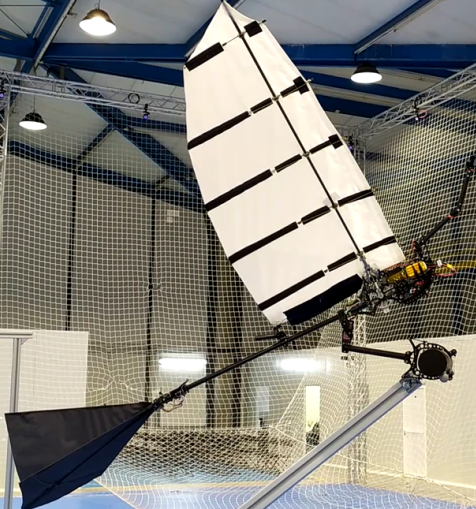

2024 | Rescaling of a Flapping-wing Aerial Vehicle for Flights in Confined Spaces

Jornadas de Automática

CITE

PDF

POSTER

This paper presents the rescaling of a flapping-wing aerial robot. Our objective is to design a platform that enables autonomous flights in confined indoor and outdoor areas. A previous model has been rescaled using more lightweight parts. The aerodynamic design includes a new airfoil (S1221) that improves efficiency. In addition, significant modifications have been made to the mechanical and electronic designs to reduce the weight by using lighter materials and smaller components. The preliminary results suggest that our prototype fulfills the weight and wing-loading constraints, providing high maneuverability.

@inproceedings{coca2024rescaling,

author={Coca, Sara and Crassous, Pablo and Sanchez-Laulhe, Ernesto and Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={Jornadas de Automática},

title={Rescaling of a Flapping-wing Aerial Vehicle for Flights in Confined Spaces},

year={2024},

month={September},

pages={1--4},

doi={10.17979/ja-cea.2024.45.10914}

}

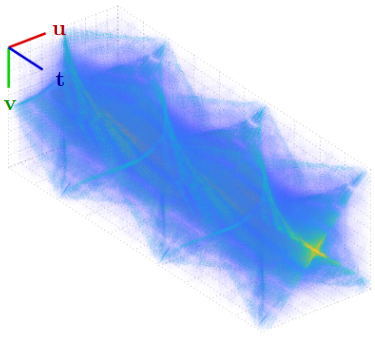

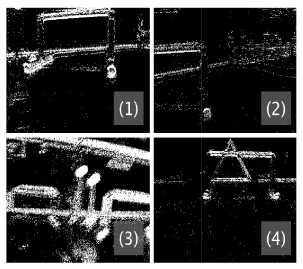

2024 | eFFT: An Event-based Method for the Efficient Computation of Exact Fourier Transforms

IEEE Transactions on Pattern Analysis and Machine Intelligence

CITE

PDF

CODE

PRESS

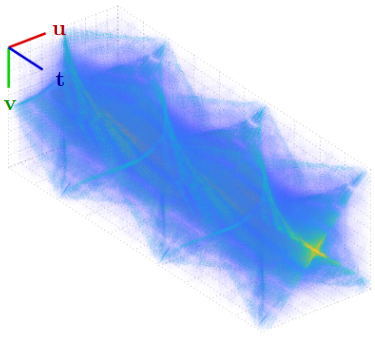

We introduce eFFT, an efficient method for the calculation of the exact Fourier transform of an asynchronous event stream. It is based on keeping the matrices involved in the Radix-2 FFT algorithm in a tree data structure and updating them with the new events, extensively reusing computations, and avoiding unnecessary calculations while preserving exactness. eFFT can operate event-by-event, requiring for each event only a partial recalculation of the tree since most of the stored data are reused. It can also operate with event packets, using the tree structure to detect and avoid unnecessary and repeated calculations when integrating the different events within each packet to further reduce the number of operations. eFFT has been extensively evaluated with public datasets and experiments, validating its exactness, low processing time, and feasibility for online execution on resource-constrained hardware. We release a C++ implementation of eFFT to the community.
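eFFT builds on the Radix-2 FFT and keeps the spectrum exact as events arrive. The sketch below does not reproduce eFFT's tree structure or its savings; it only shows, for a 1D signal, two ingredients the abstract relies on: a recursive Radix-2 FFT, and the fact that a single event (a change at one sample) shifts every Fourier coefficient by a known twiddle term, so the exact spectrum can be updated without a full recomputation. The 1D setting, the ±1 polarity model, and the naive O(N)-per-event update are simplifying assumptions.

```python
import cmath


def fft_radix2(x):
    """Recursive Radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft_radix2(x[0::2])
    odd = fft_radix2(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]  # twiddle factor times odd term
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def apply_event(spectrum, index, delta):
    """Exact naive DFT update after x[index] += delta (O(N) per event)."""
    n = len(spectrum)
    for k in range(n):
        spectrum[k] += delta * cmath.exp(-2j * cmath.pi * k * index / n)


signal = [0.0] * 8
spectrum = fft_radix2(signal)
for idx, polarity in [(3, +1), (5, -1), (3, +1)]:  # hypothetical events
    signal[idx] += polarity
    apply_event(spectrum, idx, polarity)

recomputed = fft_radix2(signal)
# near zero: the incremental spectrum matches a full recomputation
print(max(abs(a - b) for a, b in zip(spectrum, recomputed)))
```

eFFT's contribution is doing much better than this naive per-event update by reusing the intermediate Radix-2 results stored in its tree.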

@article{tapia2024efft,

author={Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

title={eFFT: An Event-based Method for the Efficient Computation of Exact Fourier Transforms},

year={2024},

month={July},

volume={46},

number={12},

issn={1939-3539},

publisher={IEEE},

pages={9630--9647},

doi={10.1109/TPAMI.2024.3422209}

}

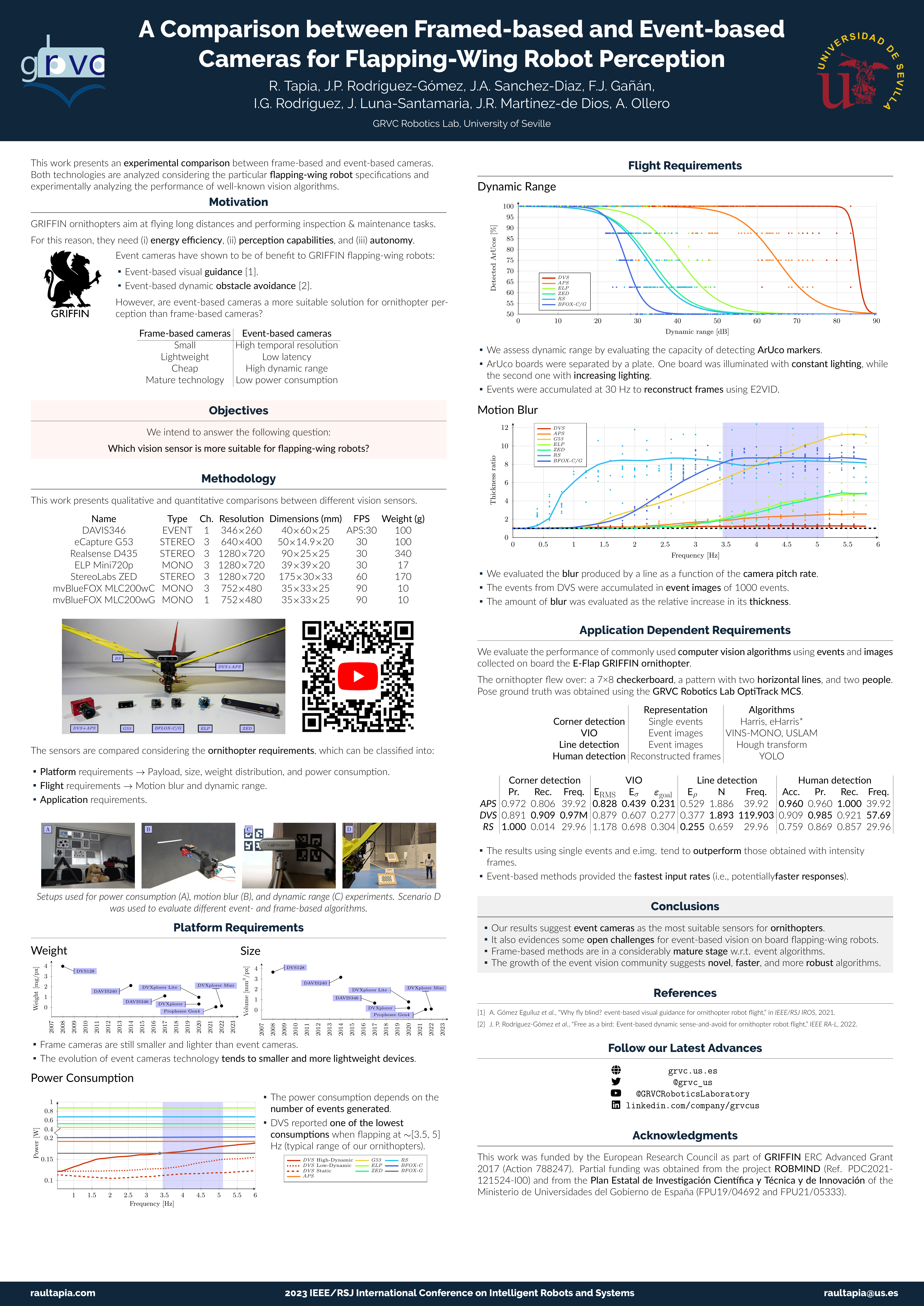

2023 | A Comparison between Frame-based and Event-based Cameras for Flapping-Wing Robot Perception

IEEE/RSJ International Conference on Intelligent Robots and Systems

CITE

PDF

PREPRINT

POSTER

VIDEO

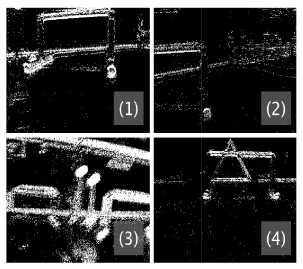

Perception systems for ornithopters face severe challenges. The harsh vibrations and abrupt movements produced during flapping tend to cause motion blur and strong changes in lighting conditions. Their strict restrictions on weight, size, and energy consumption also limit the type and number of sensors that can be mounted onboard. Lightweight traditional cameras have become a standard off-the-shelf solution in many flapping-wing designs. However, bioinspired event cameras are a promising solution for ornithopter perception due to their microsecond temporal resolution, high dynamic range, and low power consumption. This paper presents an experimental comparison between frame-based and event-based cameras. Both technologies are analyzed considering the particular specifications of flapping-wing robots, and the performance of well-known vision algorithms is experimentally evaluated with data recorded onboard a flapping-wing robot. Our results suggest that event cameras are the most suitable sensors for ornithopters. Nevertheless, they also reveal the open challenges for event-based vision onboard flapping-wing robots.

@inproceedings{tapia2023comparison,

author={Tapia, Raul and Rodríguez-Gómez, Juan Pablo and Sanchez-Diaz, Juan Antonio and Gañán, Francisco Javier and Gutierrez Rodriguez, Ivan and Luna-Santamaria, Javier and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems},

title={A Comparison between Frame-based and Event-based Cameras for Flapping-Wing Robot Perception},

year={2023},

month={October},

pages={3025--3032},

doi={10.1109/IROS55552.2023.10342500}

}

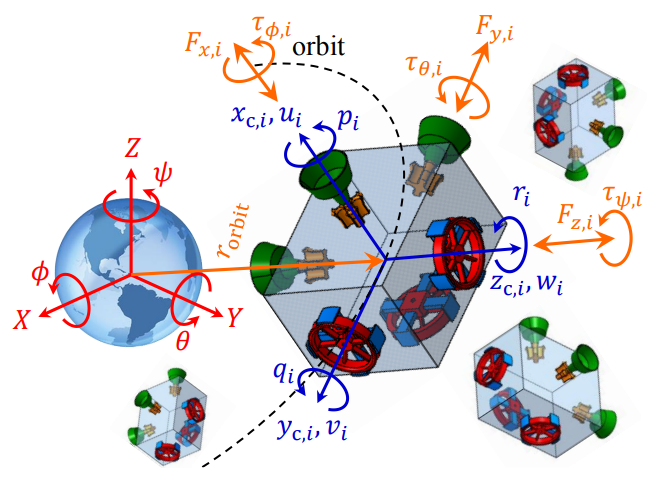

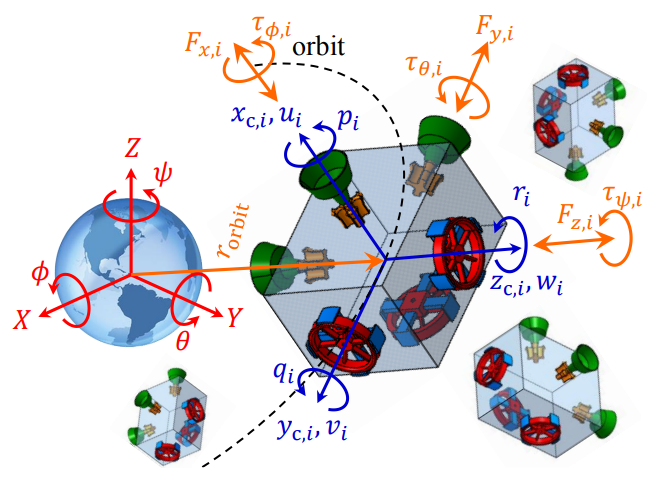

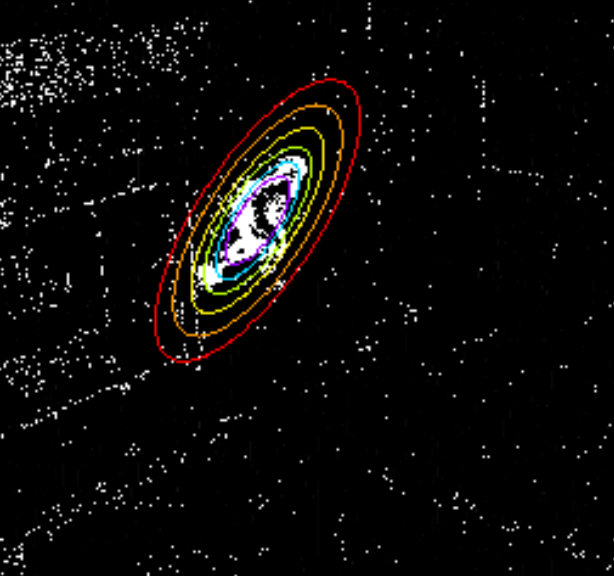

2023 | Leader-Follower Formation Control of a Large-Scale Swarm of Satellite System Using the State-Dependent Riccati Equation: Orbit-to-Orbit and In-Same-Orbit Regulation

IEEE/RSJ International Conference on Intelligent Robots and Systems

CITE

PDF

The state-dependent Riccati equation (SDRE) is a nonlinear optimal control method whose flexible structure is one of its main advantages. In this work, this flexibility is used to present a novel design for handling a soft constraint on state variables (trajectories). The concept is applied to a large-scale swarm control system with more than 1000 agents. The swarm satellite system is controlled in two modes: orbit-to-orbit and in-same-orbit. Keeping the satellites in one orbit during regulation (point-to-point motion) requires additional constraints while they move in Cartesian coordinates. For a small number of agents, trajectories could be designed for each satellite individually; for a swarm with many agents, however, this is not practical. The constraint has been incorporated into the cost function of the optimal control problem, resulting in a modified SDRE control law. The proposed method successfully controlled a swarm of 1024 agents in leader-follower mode in orbit-to-orbit and in-same-orbit simulations. With the soft constraint, the error of the satellites in in-same-orbit regulation was 0.05 percent of the travel distance. The presented approach is systematic and could be applied to larger swarm systems with different agents and dynamics.
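The core SDRE idea can be shown on a scalar system ẋ = a(x)x + bu with cost ∫(qx² + ru²)dt: at every state, the algebraic Riccati equation 2ap − (b²/r)p² + q = 0 is solved for the frozen coefficient a(x), and the positive root gives the feedback gain K = bp/r. This scalar sketch omits the swarm dynamics and the soft state constraint of the paper; the example dynamics a(x) = x², the weights, and the Euler integration are hypothetical.

```python
import math


def sdre_gain(a, b, q, r):
    """Gain K = b*p/r from the positive root of 2*a*p - (b**2/r)*p**2 + q = 0."""
    p = r * (a + math.sqrt(a * a + b * b * q / r)) / (b * b)
    return b * p / r


def simulate(x0, b=1.0, q=1.0, r=1.0, dt=1e-3, steps=5000):
    """Euler simulation of x' = a(x) x + b u with the SDRE feedback u = -K(x) x."""
    x = x0
    for _ in range(steps):
        a = x * x                    # hypothetical state-dependent coefficient
        u = -sdre_gain(a, b, q, r) * x
        x += dt * (a * x + b * u)
    return x


print(simulate(1.5))  # decays toward 0 despite the destabilizing cubic term
```

For this scalar case the closed loop is ẋ = −√(a² + b²q/r)·x, which is always stable, so the state decays at least as fast as e^(−t) with the weights above.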

@inproceedings{nekoo2023leader,

author={Nekoo, Saeed Rafee and Yao, Jie and Suarez, Alejandro and Tapia, Raul and Ollero, Anibal},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems},

title={Leader-Follower Formation Control of a Large-Scale Swarm of Satellite System Using the State-Dependent Riccati Equation: Orbit-to-Orbit and In-Same-Orbit Regulation},

year={2023},

month={October},

pages={10700--10707},

doi={10.1109/IROS55552.2023.10342383}

}

2023 | Experimental Energy Consumption Analysis of a Flapping-Wing Robot

IEEE International Conference on Robotics and Automation. Workshop on Energy Efficient Aerial Robotic Systems

CITE

PDF

PREPRINT

One of the motivations for exploring flapping-wing aerial robotic systems is to reduce energy consumption while maintaining manoeuvrability, compared to conventional unmanned aerial systems. A Flapping Wing Flying Robot (FWFR) can glide in favourable wind conditions, decreasing energy consumption significantly. It is also necessary to investigate the power consumption of the components of the flapping-wing robot. In this work, two sets of FWFR components are analyzed in terms of power consumption: a) motor/electronics components and b) a vision system for monitoring the environment during flight. A measurement device is used to record the power consumption of the motors in the launching and ascending phases of the flight and in cruising flight around the desired height. Additionally, event cameras and stereo vision systems are analyzed in terms of energy consumption. The results provide a first step towards decreasing battery usage and, consequently, extending flight time.

@inproceedings{tapia2023experimental,

author={Tapia, Raul and Satue, Alvaro Cesar and Nekoo, Saeed Rafee and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE International Conference on Robotics and Automation. Workshop on Energy Efficient Aerial Robotic Systems},

title={Experimental Energy Consumption Analysis of a Flapping-Wing Robot},

year={2023},

month={May},

pages={1--4},

doi={10.48550/arXiv.2306.00848}

}

2023 | A 94.1 g Scissors-type Dual-arm Cooperative Manipulator for Plant Sampling by an Ornithopter using a Vision Detection System

Robotica

CITE

PDF

VIDEO

The sampling and monitoring of nature have become important subjects due to the rapid loss of green areas. This work proposes a leaf-sampling method using an ornithopter robot equipped with an onboard 94.1 g dual-arm cooperative manipulator. One arm of the robot is scissors-type and the other is a gripper that collects the sample, roughly resembling an operation performed by human fingers. As a step toward autonomy, a stereo camera has been added to the ornithopter to provide visual feedback on the stem, reporting the cutting and grasping position. The position of the stem is detected by a stereo vision processing system, and the inverse kinematics of the dual arm commands both the gripper and the scissors to the right position. The resulting trajectories are smooth and avoid any damage to the actuators. The vision algorithm runs in real time on the lightweight main processor of the ornithopter, which sends the estimated stem location to a microcontroller board that controls the arms. Experimental results both indoors and outdoors confirmed the feasibility of this sampling method. The dual-arm manipulator operates after the system has perched on a stem. Perching has been addressed in previous works; here we focus on the sampling procedure and the vision/manipulator design. Flight experiments also confirm that the weight of the dual-arm system allows its installation on the flapping-wing flying robot.
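Stereo detection of the stem ultimately relies on triangulation. For a rectified stereo pair, depth follows from disparity as Z = f·B/d. The sketch below is a textbook version under that rectification assumption; the focal length, baseline, principal point, pixel coordinates, and function name are hypothetical, not the calibration or code of the ornithopter's camera.

```python
def triangulate_rectified(u_left, v_left, u_right, f, baseline, cx, cy):
    """3D point in the left-camera frame from a rectified stereo match.

    Assumes rectified images (same row in both views). f and (cx, cy) are in
    pixels, baseline in meters; returns (X, Y, Z) in meters.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = f * baseline / disparity
    x = (u_left - cx) * z / f
    y = (v_left - cy) * z / f
    return x, y, z


# Hypothetical: f = 400 px, 6 cm baseline, match 48 px apart, so Z = 0.5 m
print(triangulate_rectified(360.0, 240.0, 312.0, 400.0, 0.06, 320.0, 240.0))
```

The resulting point would still need to be transformed into the arm's base frame before inverse kinematics can use it.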

@article{nekoo2023scissors,

author={Nekoo, Saeed Rafee and Feliu-Talegon, Daniel and Tapia, Raul and Satue, Alvaro Cesar and Martínez-de Dios, José Ramiro and Ollero, Anibal},

journal={Robotica},

title={A 94.1 g Scissors-type Dual-arm Cooperative Manipulator for Plant Sampling by an Ornithopter using a Vision Detection System},

year={2023},

month={October},

volume={41},

number={10},

issn={1469-8668},

publisher={Cambridge University Press},

pages={3022--3039},

doi={10.1017/S0263574723000851}

}

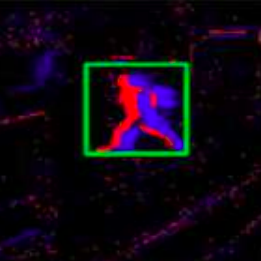

2022 | Efficient Event-based Intrusion Monitoring using Probabilistic Distributions

IEEE International Symposium on Safety, Security, and Rescue Robotics

CITE

PDF

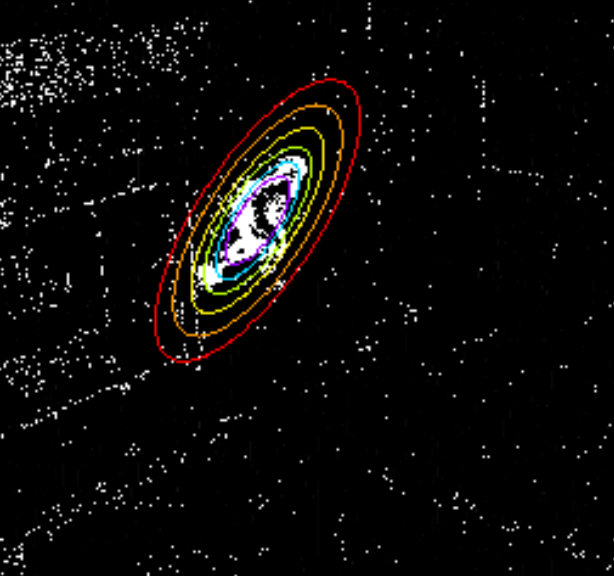

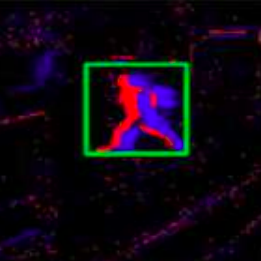

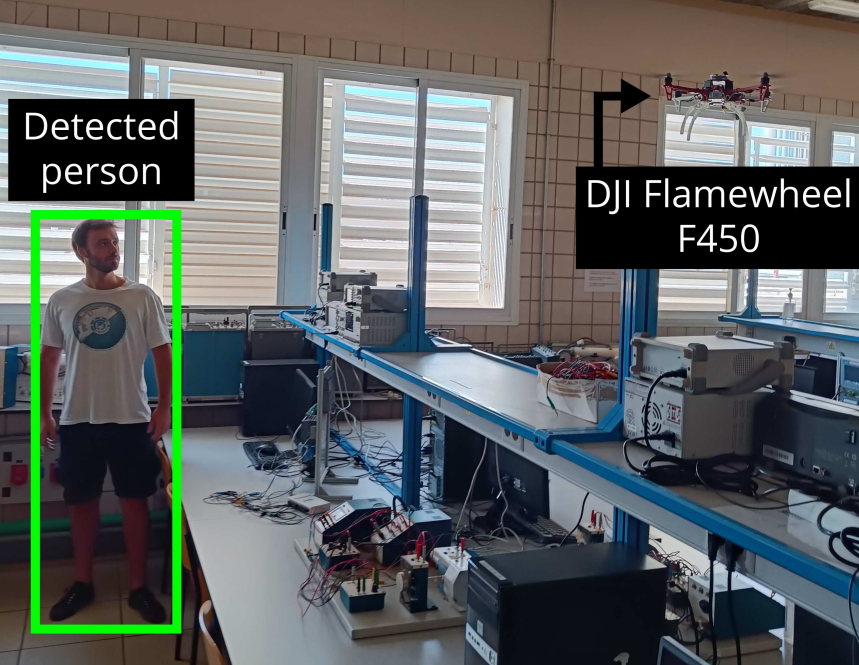

lighting conditions, among others. Event cameras are neuromorphic sensors that capture per-pixel illumination changes, providing low latency and high dynamic range. This paper presents an efficient event-based processing scheme for intrusion detection and tracking onboard robots with strict resource constraints. The method tracks moving objects using a probabilistic distribution that is updated event by event, where the processing of each event involves only a few low-cost operations, enabling online execution on resource-constrained onboard computers. The method has been experimentally validated in several real scenarios under different lighting conditions, demonstrating its accurate performance.
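Updating a probabilistic distribution event by event with only a few cheap operations can be illustrated with an exponentially weighted 2D Gaussian over event pixel coordinates. The choice of a Gaussian, the forgetting factor `alpha`, the class name, and the update rule are assumptions for illustration; the abstract does not specify the distribution or update used in the paper.

```python
class EventGaussian:
    """Hypothetical 2D Gaussian over event coordinates, updated in O(1) per event.

    Uses exponential forgetting: each new event has weight `alpha`, so older
    events fade and the estimate follows a moving object.
    """

    def __init__(self, x0, y0, var0=100.0, alpha=0.01):
        self.mx, self.my = x0, y0
        self.sxx, self.syy, self.sxy = var0, var0, 0.0
        self.alpha = alpha

    def update(self, x, y):
        a = self.alpha
        dx, dy = x - self.mx, y - self.my
        # Exponentially weighted mean update
        self.mx += a * dx
        self.my += a * dy
        # Exponentially weighted (co)variance update, incremental form
        self.sxx = (1 - a) * (self.sxx + a * dx * dx)
        self.syy = (1 - a) * (self.syy + a * dy * dy)
        self.sxy = (1 - a) * (self.sxy + a * dx * dy)


tracker = EventGaussian(0.0, 0.0)
for i in range(2000):  # hypothetical events scattered around pixel (50, 30)
    tracker.update(50.0 + (i % 3) - 1, 30.0 + (i % 5) - 2)
print(round(tracker.mx, 1), round(tracker.my, 1))
```

Each event costs a handful of multiply-adds and no memory of past events, which is the property that makes this kind of tracker suitable for small onboard computers.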

@inproceedings{ganan2022efficient,

author={Gañán, Francisco Javier and Sanchez-Diaz, Juan Antonio and Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE International Symposium on Safety, Security, and Rescue Robotics},

title={Efficient Event-based Intrusion Monitoring using Probabilistic Distributions},

year={2022},

month={November},

pages={211--216},

doi={10.1109/SSRR56537.2022.10018655}

}

2022 | Scene Recognition for Urban Search and Rescue using Global Description and Semi-Supervised Labelling

IEEE International Symposium on Safety, Security, and Rescue Robotics

CITE

PDF

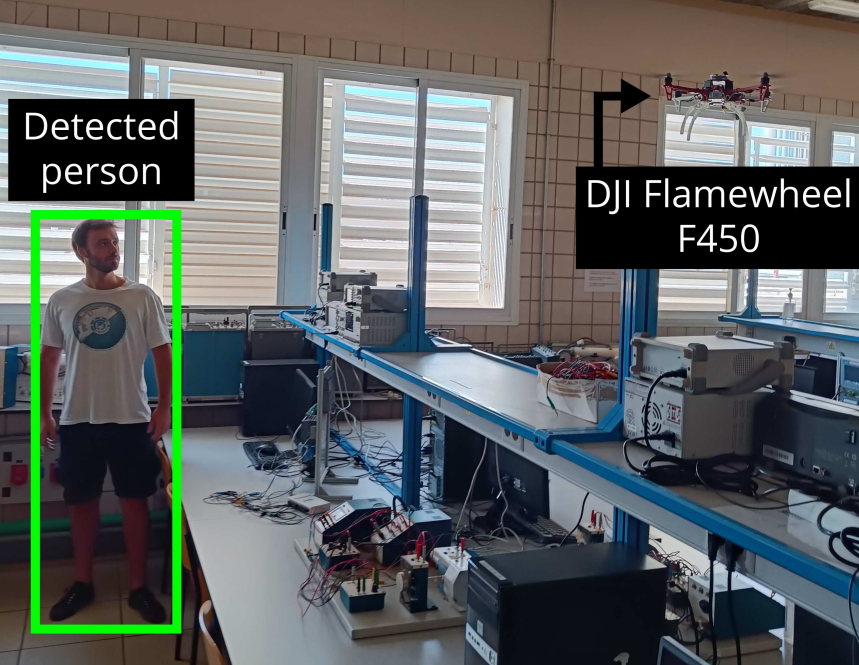

Autonomous aerial robots for urban search and rescue (USAR) operations require robust perception systems for localization and mapping. Although local feature description is widely used for geometric map construction, global image descriptors leverage scene information to perform semantic localization, allowing topological maps to consider relations between places and elements in the scenario. This paper proposes a scene recognition method for USAR operations using a collaborative human-robot approach. The proposed method uses global image description to train an SVM-based classification model with semi-supervised labeled data. It has been experimentally validated in several indoor scenarios on board a multirotor robot.

@inproceedings{sanchez2022scene,

author={Sanchez-Diaz, Juan Antonio and Gañán, Francisco Javier and Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE International Symposium on Safety, Security, and Rescue Robotics},

title={Scene Recognition for Urban Search and Rescue using Global Description and Semi-Supervised Labelling},

year={2022},

month={November},

pages={238--243},

doi={10.1109/SSRR56537.2022.10018660}

}

2022 | Aerial Manipulation System for Safe Human-Robot Handover in Power Line Maintenance

Robotics: Science and Systems. Workshop in Close Proximity Human-Robot Collaboration

CITE

PDF

VIDEO

Human workers conducting inspection and maintenance (I&M) operations on high-altitude infrastructures such as power lines or industrial facilities face significant difficulties obtaining tools or devices once they are deployed in this kind of workspace. In this sense, aerial manipulation robots can be employed to quickly deliver objects to the operator, using long-reach configurations to improve safety and the operator's feeling of comfort during the handover. This paper presents a dual-arm aerial manipulation robot in a cable-suspended configuration intended to conduct fast and safe aerial delivery, following a human-centered approach that relies on an onboard perception system with which the aerial robot adapts its pose to the worker. Preliminary experimental results in an indoor testbed validate the proposed system design.

@inproceedings{ganan2022aerial,

author={Gañán, Francisco Javier and Suarez, Alejandro and Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={Robotics: Science and Systems. Workshop in Close Proximity Human-Robot Collaboration},

title={Aerial Manipulation System for Safe Human-Robot Handover in Power Line Maintenance},

year={2022},

month={July},

pages={1--4},

doi={10.5281/zenodo.7153329}

}

2022 | ASAP: Adaptive Transmission Scheme for Online Processing of Event-Based Algorithms

Autonomous Robots

CITE

PDF

PREPRINT

CODE

Online event-based perception techniques onboard robots navigating in complex, unstructured, and dynamic environments can suffer unpredictable changes in the incoming event rates and their processing times, which can cause computational overflow or loss of responsiveness. This paper presents ASAP: a novel event handling framework that dynamically adapts the transmission of events to the processing algorithm, keeping the system responsive and preventing overflows. ASAP is composed of two adaptive mechanisms. The first prevents event processing overflows by discarding an adaptive percentage of the incoming events. The second dynamically adapts the size of the event packets to reduce the delay between event generation and processing. ASAP has guaranteed convergence and is flexible with respect to the processing algorithm. It has been validated onboard a quadrotor and an ornithopter robot in challenging conditions.
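The first ASAP mechanism discards an adaptive percentage of the incoming events. A minimal sketch, assuming the keep ratio is simply the algorithm's measured processing capacity divided by the incoming event rate (capped at 1) and that events are decimated deterministically rather than at random; `keep_ratio` and `Decimator` are hypothetical names, and ASAP's actual adaptation law and convergence guarantees are in the paper and not reproduced here.

```python
def keep_ratio(incoming_rate, capacity_rate):
    """Hypothetical rule: fraction of events to keep so processing keeps up."""
    if incoming_rate <= 0:
        return 1.0
    return min(1.0, capacity_rate / incoming_rate)


class Decimator:
    """Keeps a fraction `ratio` of events, spread evenly over the stream."""

    def __init__(self, ratio):
        self.ratio = ratio
        self.acc = 0.0  # accumulated "credit" for keeping events

    def keep(self):
        self.acc += self.ratio
        if self.acc >= 1.0:
            self.acc -= 1.0
            return True
        return False


# Hypothetical: 1000 ev/s arrive but the algorithm can only process 250 ev/s
ratio = keep_ratio(1000.0, 250.0)
decimator = Decimator(ratio)
kept = [e for e in range(1000) if decimator.keep()]
print(ratio, len(kept))  # 0.25 250
```

Spreading the kept events evenly, instead of dropping whole bursts, preserves the temporal coverage of the stream that downstream event-based algorithms usually rely on.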

@article{tapia2022asap,

author={Tapia, Raul and Martínez-de Dios, José Ramiro and Gómez Eguíluz, Augusto and Ollero, Anibal},

journal={Autonomous Robots},

title={ASAP: Adaptive Transmission Scheme for Online Processing of Event-Based Algorithms},

year={2022},

month={September},

volume={46},

number={8},

issn={1573-7527},

editorial={Springer},

pages={879--892},

doi={10.1007/s10514-022-10051-y}

}

2022 | Free as a Bird: Event-Based Dynamic Sense-and-Avoid for Ornithopter Robot Flight

IEEE Robotics and Automation Letters

CITE

PDF

VIDEO

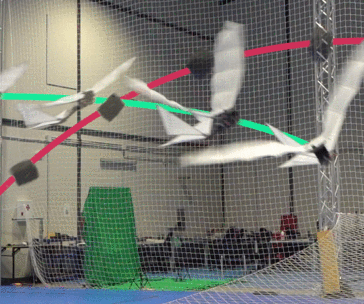

Autonomous flight of flapping-wing robots is a major challenge for robot perception. Most previous sense-and-avoid works on flapping-wing robots have considered only static obstacles. This paper presents a fully onboard dynamic sense-and-avoid scheme for large-scale ornithopters using event cameras. These sensors trigger pixel information in response to illumination changes in the scene, such as those produced by dynamic objects. The method performs event-by-event processing on low-cost hardware such as that carried onboard small aerial vehicles. The proposed scheme detects obstacles and evaluates possible collisions with the robot body. The onboard controller acts on the horizontal and vertical tail deflections to execute the avoidance maneuver. The scheme is validated in both indoor and outdoor scenarios using obstacles of different shapes and sizes. To the best of the authors' knowledge, this is the first event-based method for dynamic obstacle avoidance in a flapping-wing robot.

@article{rodriguez2022free,

author={Rodríguez-Gómez, Juan Pablo and Tapia, Raul and Guzmán Garcia, María Mar and Martínez-de Dios, José Ramiro and Ollero, Anibal},

journal={IEEE Robotics and Automation Letters},

title={Free as a Bird: Event-Based Dynamic Sense-and-Avoid for Ornithopter Robot Flight},

year={2022},

month={April},

volume={7},

number={2},

issn={2377-3766},

editorial={IEEE},

pages={5413--5420},

doi={10.1109/LRA.2022.3153904}

}

2021 | UAV Human Teleoperation using Event-Based and Frame-Based Cameras

Aerial Robotic Systems Physically Interacting with the Environment

CITE

PDF

Teleoperation is a crucial aspect of human-robot interaction in unmanned aerial vehicle (UAV) applications. Fast perception processing is required to ensure robustness, precision, and safety. Event cameras are neuromorphic sensors that provide low-latency response, high dynamic range, and low power consumption. Although classical image-based methods have been extensively used for human-robot interaction tasks, their responsiveness is limited by their processing rates. This paper presents a human-robot teleoperation scheme for UAVs that exploits the advantages of both traditional and event cameras. The proposed scheme was tested in teleoperation missions in which the pose of a multirotor robot is controlled in real time using human gestures detected from events.

@inproceedings{rodriguez2021uav,

author={Rodríguez-Gómez, Juan Pablo and Tapia, Raul and Gómez Eguíluz, Augusto and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={Aerial Robotic Systems Physically Interacting with the Environment},

title={UAV Human Teleoperation using Event-Based and Frame-Based Cameras},

year={2021},

month={October},

pages={1--5},

doi={10.1109/AIRPHARO52252.2021.9571049}

}

2021 | Why Fly Blind? Event-based Visual Guidance for Ornithopter Robot Flight

IEEE/RSJ International Conference on Intelligent Robots and Systems

CITE

PDF

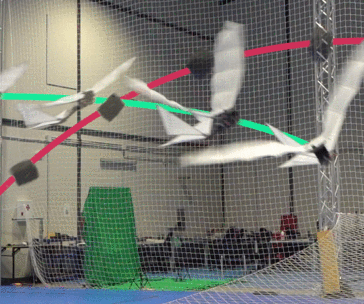

VIDEO

The development of perception and control methods that allow bird-scale flapping-wing robots (a.k.a. ornithopters) to operate autonomously is an under-researched area. This paper presents a fully onboard event-based method for ornithopter robot visual guidance. The method uses event cameras, exploiting their fast response and robustness against motion blur, to feed the ornithopter control loop at high rates (100 Hz). The proposed scheme visually guides the robot using line features extracted in the event image plane and controls the flight by acting on the horizontal and vertical tail deflections. It has been validated onboard a real ornithopter robot with real-time computation on low-cost hardware. The experimental evaluation includes sets of experiments with different maneuvers indoors and outdoors.

@inproceedings{gomez2021why,

author={Gómez Eguíluz, Augusto and Rodríguez-Gómez, Juan Pablo and Tapia, Raul and Maldonado, Francisco Javier and Acosta, José Ángel and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems},

title={Why Fly Blind? Event-based Visual Guidance for Ornithopter Robot Flight},

year={2021},

month={September},

pages={1958--1965},

doi={10.1109/IROS51168.2021.9636315}

}

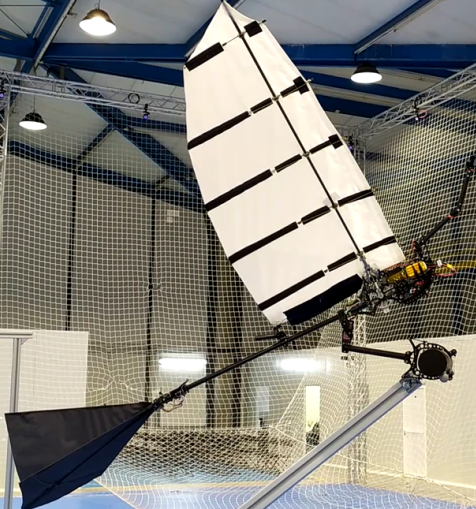

2021 | The GRIFFIN Perception Dataset: Bridging the Gap Between Flapping-Wing Flight and Robotic Perception

IEEE Robotics and Automation Letters

CITE

PDF

CODE

DATASET

VIDEO

The development of automatic perception systems and techniques for bio-inspired flapping-wing robots is severely hampered by the high technical complexity of these platforms and of installing onboard sensors and electronics. In addition, flapping-wing robot perception suffers from high vibration levels and abrupt movements during flight, which cause motion blur and strong changes in lighting conditions. This paper presents a perception dataset for bird-scale flapping-wing robots as a tool to help alleviate these problems. The data include measurements from onboard sensors widely used in aerial robotics and suited to the perception challenges of flapping-wing robots, such as an event camera, a conventional camera, and two Inertial Measurement Units (IMUs), as well as ground-truth measurements from a laser tracker or a motion capture system. A total of 21 datasets of different types of flights were collected in three different scenarios (one indoor and two outdoor). To the best of the authors' knowledge, this is the first dataset for flapping-wing robot perception.

@article{rodriguez2021griffin,

author={Rodríguez-Gómez, Juan Pablo and Tapia, Raul and Paneque, Julio L. and Grau, Pedro and Gómez Eguíluz, Augusto and Martínez-de Dios, José Ramiro and Ollero, Anibal},

journal={IEEE Robotics and Automation Letters},

title={The GRIFFIN Perception Dataset: Bridging the Gap Between Flapping-Wing Flight and Robotic Perception},

year={2021},

month={April},

volume={6},

number={2},

issn={2377-3766},

editorial={IEEE},

pages={1066--1073},

doi={10.1109/LRA.2021.3056348}

}

2020 | Towards UAS Surveillance using Event Cameras

IEEE International Symposium on Safety, Security, and Rescue Robotics

CITE

PDF

Aerial robot perception for surveillance and search and rescue in unstructured and complex environments poses challenging problems in which traditional sensors are severely constrained. This paper analyzes the use of event cameras onboard aerial robots for surveillance applications. Event cameras have high temporal resolution and dynamic range, which make them very robust against motion blur and adverse lighting conditions. The paper analyzes the pros and cons of event cameras and presents an event-based processing scheme for target detection and tracking. The scheme is experimentally validated in challenging environments and under different lighting conditions.

@inproceedings{martinez2020towards,

author={Martínez-de Dios, José Ramiro and Gómez Eguíluz, Augusto and Rodríguez-Gómez, Juan Pablo and Tapia, Raul and Ollero, Anibal},

booktitle={IEEE International Symposium on Safety, Security, and Rescue Robotics},

title={Towards UAS Surveillance using Event Cameras},

year={2020},

month={November},

pages={71--76},

doi={10.1109/SSRR50563.2020.9292606}

}

2020 | ASAP: Adaptive Scheme for Asynchronous Processing of Event-Based Vision Algorithms

IEEE International Conference on Robotics and Automation. Workshop on Unconventional Sensors in Robotics

CITE

PDF

PREPRINT

CODE

Event cameras can capture pixel-level illumination changes with very high temporal resolution and dynamic range. They have received increasing research interest due to their robustness to lighting conditions and motion blur. Two main approaches exist in the literature to feed event-based processing algorithms: packaging the triggered events into event packages, or sending them one by one as single events. These approaches suffer from either processing overflow or lack of responsiveness. Processing overflow is caused by high event generation rates when the algorithm cannot process all the events in real time. Conversely, lack of responsiveness occurs at low event generation rates, when the event packages are sent at too low a frequency. This paper presents ASAP, an adaptive scheme to manage the event stream through variable-size packages that adapt to the event package processing times. The experimental results show that ASAP is capable of feeding an asynchronous event-by-event clustering algorithm in a responsive and efficient manner while preventing overflow.

@inproceedings{tapia2020asap,

author={Tapia, Raul and Gómez Eguíluz, Augusto and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={IEEE International Conference on Robotics and Automation. Workshop on Unconventional Sensors in Robotics},

title={ASAP: Adaptive Scheme for Asynchronous Processing of Event-Based Vision Algorithms},

year={2020},

month={May},

pages={1--3},

doi={10.5281/zenodo.3855412}

}

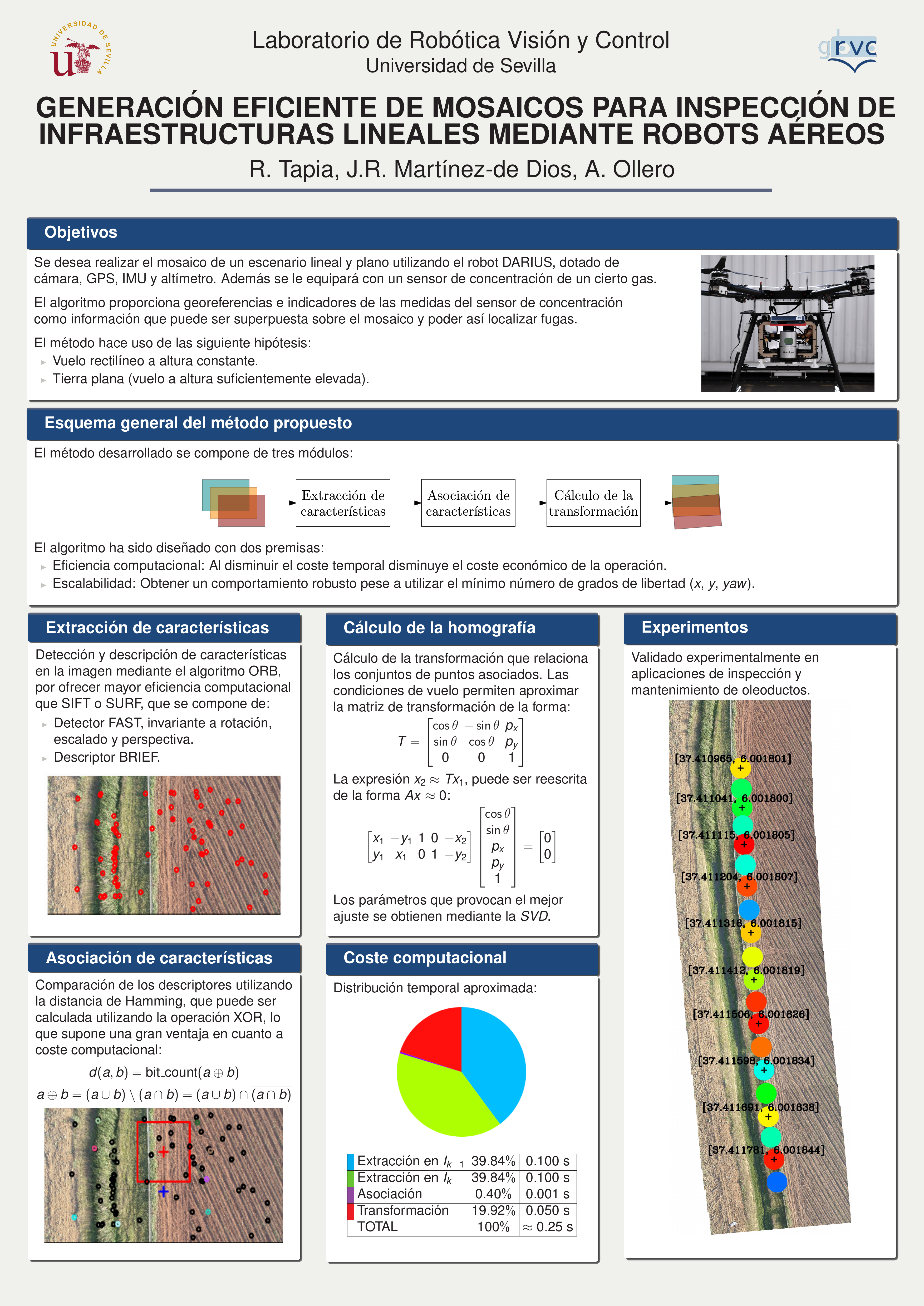

2019 | Efficient Mosaicking for Linear Infrastructure Inspection using Aerial Robots

Jornadas de Automática

CITE

PDF

POSTER

This paper presents a mosaic generation method using images captured by aerial robots for linear infrastructure inspection applications. The method exploits the problem hypotheses to reduce its computational cost while keeping its performance precise and robust. In particular, it uses the rectilinear flight hypothesis and the estimated displacement between consecutive images to select regions of interest, avoiding feature detection and matching over the whole image. This also reduces the number of outliers and therefore simplifies the optimization used to compute the transformation between images. The method has been validated experimentally on gas pipeline inspection missions with aerial robots.

@inproceedings{tapia2019efficient,

author={Tapia, Raul and Martínez-de Dios, José Ramiro and Ollero, Anibal},

booktitle={Jornadas de Automática},

title={Efficient Mosaicking for Linear Infrastructure Inspection using Aerial Robots},

year={2019},

month={September},

pages={802--809},

doi={10.17979/spudc.9788497497169.802}

}